The “Ed:” note in our last article, “Beating Dead Horses”, mentioned that statistical significance does not prove a contention and is not necessarily determinative. The Nature article it referenced, “Scientists Rise Up Against Statistical Significance”, is important both for describing this real problem across a multitude of research and for the more than 800 scientists from 50 countries who want us to know about it.

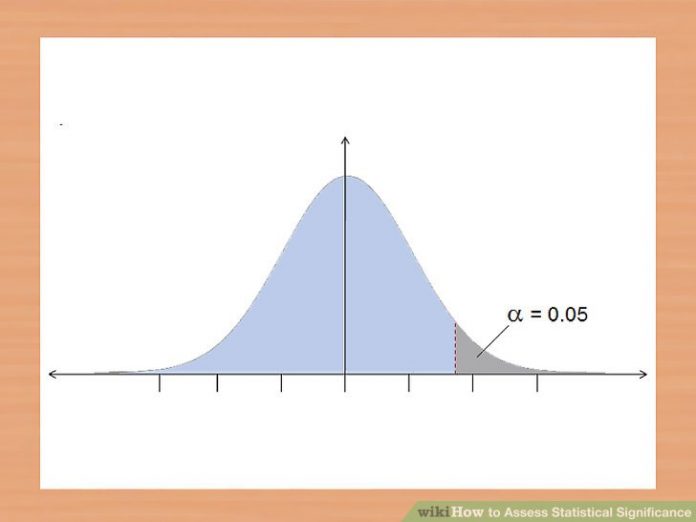

A finding of statistical significance in a study generally means that, if there were really no association, results at least as strong as those observed would turn up less than 5% of the time by chance alone (the conventional p < 0.05 threshold). That is not the same as the association being true in the sense of causative, or even necessarily concurrent. In the same way, not finding an association significant just means that, in that particular study, the result could plausibly have arisen by chance. It does not show that there is no association. (This can be phrased in other ways, as the Nature article does. For an entertaining but serious discussion of how correlation does not equal causation, see Dr. Przebinda’s “Spuriouser and Spuriouser”.)
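To see why clearing the 5% bar proves so little on its own, here is a minimal Python sketch using synthetic data only. It simulates many hypothetical studies in which, by construction, there is no real difference between two groups, and counts how many still come out “significant”. The helper names (`two_sample_p_value`, `false_positive_rate`) and the settings (2,000 studies, 100 subjects per group) are illustrative assumptions, and the p-value uses a large-sample z approximation rather than an exact t-test.

```python
import math
import random
from statistics import NormalDist


def mean_and_variance(xs):
    """Sample mean and (n - 1) sample variance of a list of numbers."""
    m = math.fsum(xs) / len(xs)
    v = math.fsum((x - m) ** 2 for x in xs) / (len(xs) - 1)
    return m, v


def two_sample_p_value(a, b):
    """Two-sided p-value for a difference in means (large-sample z approximation)."""
    ma, va = mean_and_variance(a)
    mb, vb = mean_and_variance(b)
    se = math.sqrt(va / len(a) + vb / len(b))
    z = (ma - mb) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def false_positive_rate(n_studies=2000, n_per_group=100, alpha=0.05, seed=1):
    """Fraction of simulated no-effect studies that still reach p < alpha."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_studies):
        # Both groups come from the same distribution, so any "effect" is pure chance.
        a = [rng.gauss(0, 1) for _ in range(n_per_group)]
        b = [rng.gauss(0, 1) for _ in range(n_per_group)]
        if two_sample_p_value(a, b) < alpha:
            hits += 1
    return hits / n_studies


if __name__ == "__main__":
    print(f"'Significant' results with no real effect: {false_positive_rate():.1%}")
```

The printed rate should land near 5%, which is exactly what the threshold permits: roughly 1 in 20 studies of a nonexistent effect will still look “significant”. Run enough studies, or test enough variables within one study, and some such “findings” are all but guaranteed.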

Too often, a 95% “confidence interval” that excludes no effect (for standard tests, the same as clearing the 5% significance bar) is taken to justify authors’ claims that they’ve more or less proven the hypothesis they designed the research to test. (Confirming theories is a lot sexier, more publishable, and better for career advancement than failing to.) Yet there is far more value in disproving the countless ideas that scientists come up with, because narrowing down the range of possibilities is what ultimately leads to consensus about the best ones.

The big problem is that the majority of studies published, especially in the social sciences (yes, I’m talking to you, “public health researchers”, because that’s not medical science), have not been replicated. And when replication is attempted, most can’t be. (We discussed this in 2015 in “Trouble in the Ivory Tower”.) Invalid significance is (only) one reason.

A “significant” finding is also just the tail end of the formal process of research design, and it is utterly dependent on the quality of the preceding steps. They begin with a theory about something contributing to or causing something else, whether mechanical, biological, geological, psychological or social. A question about the theory then has to be posed which, if testable, could shed light on the theory’s validity. Next, a way to answer that question experimentally has to be designed, and it has to be carried out in such a way that observing it does not influence the results. Once obtained, the results (data) from the experimental test have to be understood. Here is where statistical analysis comes in, with various mathematical tests to put the data together in meaningful ways. With that come tests for “significance”.

A study can go wrong at any step of that sequence, from the logic of the theory to the kind of question posed, the way it is experimentally tested, and the way the results are interpreted. Because scientists, despite popular belief, are human (and rather egotistical), there are usually problems that can be identified. The proper course of science is to critique and question all those steps in order to better ascertain the utility of the approach and the accuracy of the findings. And there are always issues worth identifying.

That is, unless the researchers are publishing studies with biases that their professions and publishers share, and with agendas that politicians and their media fellow travelers want to promote. This, of course, includes work purporting to show that guns are dangerous to society. That lane is where DRGO, as a scientific watchdog group, wages its counter-insurgency for the right to keep and bear arms.

We want readers to understand what to look for and how we look at such research. In 2016 we published “Critiquing the ‘Research’ Criticizing Guns”, listing a number of ways we can get fooled into accepting experts’ claims. It is worth another read, and thanks to the Nature article, we’re adding another item to that list: Insignificant Significance. You can find the whole list under “Gun Research” in the “Positions & Resources” section of our home page, which directs readers to our PDF “Reading ‘Gun Violence’ Research Critically”.

Here’s the whole list:

1. Personal bias: Antipathy toward gun ownership is often evident in the language of the introduction and summary of the work. It may arise from the authors’ personal histories or fit their career arcs. Hoplophobia is often present.

2. Guns as independent risk factors: Studies that treat guns as a causative agent (e.g., the “guns as viruses” meme). Then a hypothesis is proposed and analytic approaches are chosen that reinforce the notion.

3. Selection bias and cherry-picked data: Choices are always made about what data will be sought, from what sources and over what time periods, and then how it should be interpreted. Smart academicians (and they are very smart) can skew outcomes from start to finish. Scrupulous ones don’t.

4. Arbitrary analogies: Comparing deaths from gunshot to entirely different phenomena (e.g., vehicle accident deaths). Using flawed premises and logic that have no relationship to the ways that guns work and can harm (e.g., that we must have “smart guns”, because autos have built-in safety devices).

5. Blame mongering: Holding people responsible other than the ones actually in the wrong, who misuse guns at the wrong times.

6. Diversionary tactics: Setting up straw men, such as proclaiming that being shot by someone you know is more likely than being attacked by a terrorist.

7. False attribution: Depicting correlation as causation, a near universal tactic. Presenting gunshot deaths and injuries as consequences intrinsic to the existence of guns, rather than as aberrations from normal gun use and users.

8. Data withholding: Refusing to share data shields work from criticism, probably when criticism is most merited. Charging for access to articles behind pay walls is another, commercialized way to limit criticism.

9. Insignificant Significance: A significant result only demonstrates that, given the premises and methodology chosen, the finding would be unlikely to arise by chance alone if there were no real effect. It does not prove something is or is not true, nor does it negate criticism of any part of the study.
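Items 7 and 9 can be seen working together in a small simulation. The minimal Python sketch below uses synthetic data and hypothetical variables: a hidden factor `z` drives both `x` and `y`, while `x` has no effect at all on `y`. The raw correlation between `x` and `y` comes out strong and overwhelmingly “significant”, yet it vanishes once `z` is accounted for with a partial correlation. The function names, sample size, and the Fisher z approximation for the p-value are illustrative choices, not a method taken from any particular study.

```python
import math
import random
from statistics import NormalDist


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length lists."""
    mx, my = math.fsum(x) / len(x), math.fsum(y) / len(y)
    sxy = math.fsum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = math.fsum((a - mx) ** 2 for a in x)
    syy = math.fsum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def correlation_p_value(r, n):
    """Two-sided p-value for r, via the Fisher z-transform (approximately normal if true r = 0)."""
    z = math.atanh(r) * math.sqrt(n - 3)
    return 2 * (1 - NormalDist().cdf(abs(z)))


def partial_r(r_xy, r_xz, r_yz):
    """Correlation of x and y after removing the linear influence of z from both."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


def simulate(n=1000, seed=7):
    """Return (raw r_xy, its p-value, r_xy controlling for z) for one synthetic data set."""
    rng = random.Random(seed)
    z = [rng.gauss(0, 1) for _ in range(n)]   # hidden common factor
    x = [zi + rng.gauss(0, 1) for zi in z]    # x depends only on z
    y = [zi + rng.gauss(0, 1) for zi in z]    # y depends only on z, never on x
    r_xy = pearson_r(x, y)
    return r_xy, correlation_p_value(r_xy, n), partial_r(r_xy, pearson_r(x, z), pearson_r(y, z))


if __name__ == "__main__":
    r, p, pr = simulate()
    print(f"raw r = {r:.2f}, p = {p:.3g}, r controlling for z = {pr:.2f}")
```

With these settings the raw correlation should land near 0.5 with a vanishingly small p-value, while the partial correlation hovers near zero. The significance test answered its question correctly; that question simply was not the causal one.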

That is quite a list of potholes on the road to enlightenment. But we’ll keep steering ahead even while navigating the bumps.


— DRGO Editor Robert B. Young, MD is a psychiatrist practicing in Pittsford, NY, an associate clinical professor at the University of Rochester School of Medicine, and a Distinguished Life Fellow of the American Psychiatric Association.

All DRGO articles by Robert B. Young, MD